Learn about Computer Vision, which is a field of Computer Science that works on enabling computers to see, identify, and process in the same way that human vision does.

This article is about Computer Vision, which is a field of Computer Science that works on enabling computers to see, identify, and process in the same way that human vision does. It then provides an appropriate output. In the era of digitalization, AI’s ability to track moving objects and analyze them plays a very crucial role. Just imagine: smart drones, cars, robots, sports analytics, contact TV, marketing, advertising — the list of use cases is almost endless. The more objects modern AI can track and analyze, the more opportunities we can discover. That is why things like Object Tracking is so important.

Track Them All: Multiple Moving Objects and Their Motion Characteristics

Tracking multiple objects through video is a vital issue in computer vision. It’s used in various video analysis scenarios, such as visual surveillance, sports analysis, robotic navigation, autonomous driving, human-computer interaction, and medical visualization. In cases of monitoring objects of a certain category, such as people or cars, detectors used to make tracking easier. Usually, it is done in two steps: Detecting and Tracking.

Object Detection

Tracking an object requires the installation of bounding boxes around that object in the image. For this purpose, Object Detection is used. It identifies and indicates a location of objects in bounding boxes in an image.

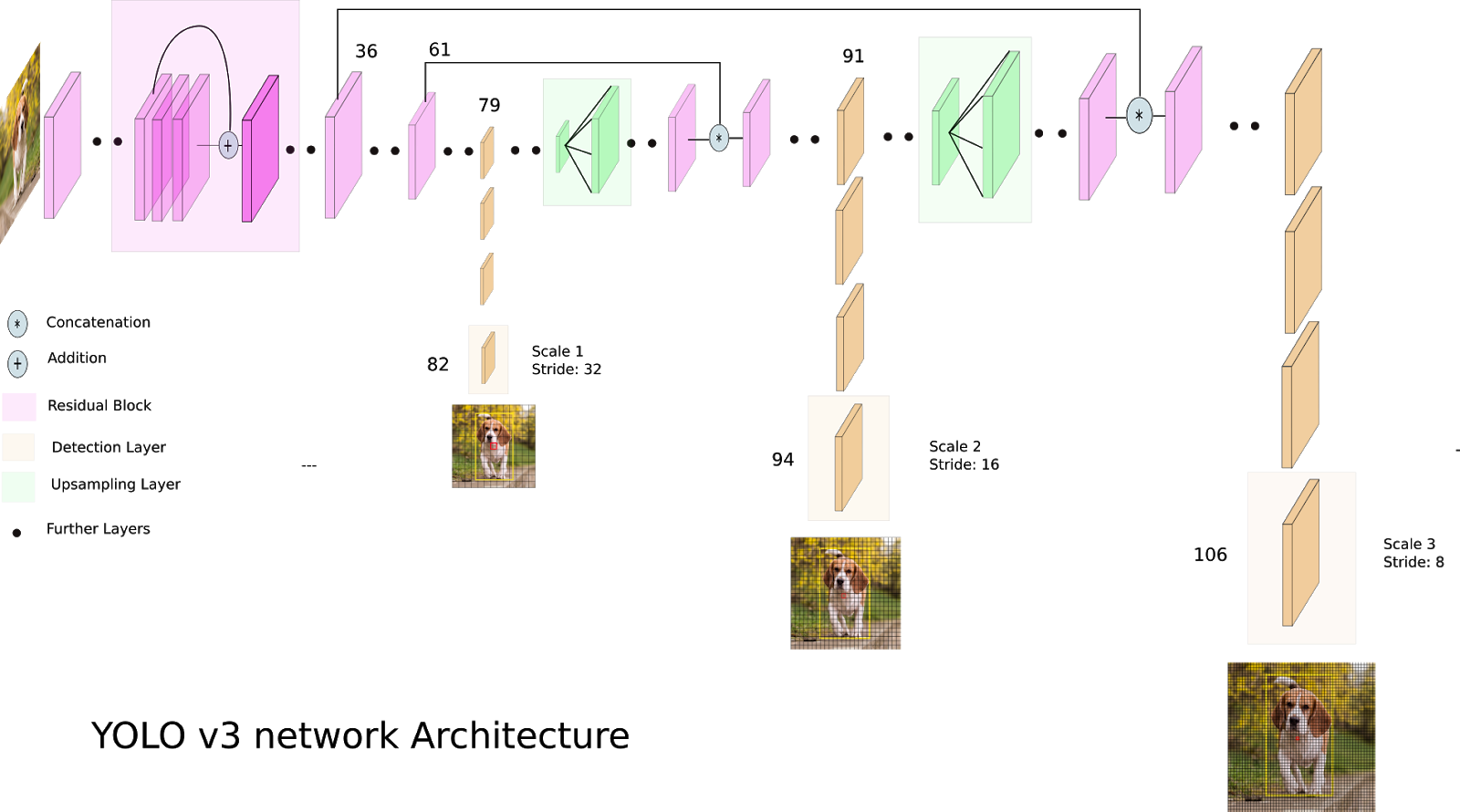

YOLO (You Only Look Once) is a Darknet-based Real-Time Object Detector, which handles this task greatly. The YOLO model scans a certain part of the image only once and does it quickly and without loss of accuracy.

The newest version is YOLOv3 based on the Darknet-53 network and contains 53 convolutional layers. It has sufficiently higher accuracy of detecting and constructing a bounding rectangle. YOLOv3 is significantly exceeding Faster R-CNN and RetinaNet models in processing speed of a single image and handles a video sequence of 10-15 FPS even on the budget segment GPU.

Object Tracking

The basic principles of object tracking are based on the online version of the AdaBoost algorithm, which uses a cascade HAAR detector. This model learns from positive and negative examples of the object. A user, or an object detection algorithm, sets a bounding box — a positive example and image areas outside a bounding box are considered as a negative. On the new frame, the classifier starts in the surrounding area of the previous location and forms an estimate. The new location of the object is where the score is maximum, thus adding another positive example for the classifier. The classifier updates with each new frame arrived.

Kernelized Correlation Filters (KFC) is a tracker with high accuracy and speed. It uses samples from the original positive example area and then forms a mathematical model for overlapping sections for initialization. The tracker can repeat the detection of objects through a fixed period of time to improve accuracy and reinitialize.

To calculate motion characteristics, it is necessary to convert coordinates and trajectories from video coordinates to coordinates of a real scene with the help of homographic transformation.

For sports analysis applications, for example, the real scene is a description of a playing field and its dimensions. This description is translated into a field model, which is analyzed on video frames. A field model is a set of zoning lines and their intersections. The Hough Transform method is used to search for these elements. Next, we compare the found lines with the field model, select the corresponding points, and calculate the projection matrices, with which we transform the screen coordinates into the coordinates of the field model. If some matches are inaccurate, then one of the three robust methods RANSAC, LMeDS and PHO are used. They try many random subsets of the corresponding pairs of points, evaluate the homography matrix using a subset and a simple least squares algorithm, and then calculate the quality of the resulting homography. The best subset is then used to obtain an initial estimate of the homography matrix. And finally, motion characteristics of objects are calculated based on the trajectories and coordinates of objects from the playing field model.

And of course, in order to capture a good-quality video, it’s better to shoot with stationary cameras. If records were made not from fixed cameras, then it’s necessary to remove jitter and displacement effects. In this case, we need to use Motion Compensation with Optical Flow.

I’m always eager to share my best practices and wide open to learn something new, so if you have any questions or ideas — feel free to write to me or leave a comment in the comments section!